- Prerequisites
- Add `hadoop` user with password `hadoop`
- Configure passwordless SSH
- Download Hadoop
- Setting up the environment variables
- Configure Hadoop
- Configure `mapred-site.xml`
- Format HDFS NameNode
- Start the Hadoop Cluster
- Check that all components work correctly
- Stop the Hadoop components

Prerequisites

Update and upgrade your system:

```
nosql@nosql:~$ sudo apt-get update -y
nosql@nosql:~$ sudo apt-get upgrade -y
```

Hadoop needs Java. Install the default JDK and JRE, then check the installed version:

```
nosql@nosql:~$ sudo apt-get install default-jdk default-jre -y
nosql@nosql:~$ java -version
openjdk version "11.0.12" 2021-07-20
OpenJDK Runtime Environment (build 11.0.12+7-Ubuntu-0ubuntu3)
OpenJDK 64-Bit Server VM (build 11.0.12+7-Ubuntu-0ubuntu3, mixed mode, sharing)
```

You also need both the OpenSSH server and the OpenSSH client packages. Install them with this command:

```
nosql@nosql:~$ sudo apt install openssh-server openssh-client
```
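Before moving on, it can help to confirm that the tools installed above are actually on the `PATH`. This is a minimal POSIX shell sketch; `require_cmds` is a hypothetical helper name, and it assumes `/usr/sbin` (where `sshd` lives) is on the `PATH`, as it usually is on Ubuntu.

```shell
# Check that every command given as an argument can be found on PATH.
# Prints "missing: NAME" on stderr for each one that cannot, and returns 1
# if anything is missing (0 otherwise).
require_cmds() {
  local cmd status=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      status=1
    fi
  done
  return "$status"
}

# Example, after the apt installs above:
#   require_cmds java javac ssh sshd && echo "prerequisites look OK"
```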

Add `hadoop` user with password `hadoop`

Create the `hadoop` user (type `hadoop` when asked for the new password) and add it to the `sudo` group:

```
nosql@nosql:~$ sudo adduser hadoop
[sudo] password for wmii:
Adding user `hadoop' ...
Adding new group `hadoop' (1001) ...
Adding new user `hadoop' (1001) with group `hadoop' ...
Creating home directory `/home/hadoop' ...
Copying files from `/etc/skel' ...
Enter new UNIX password:
Retype new UNIX password:
passwd: password updated successfully
Changing the user information for hadoop
Enter the new value, or press ENTER for the default
	Full Name []:
	Room Number []:
	Work Phone []:
	Home Phone []:
	Other []:
Is the information correct? [Y/n]
nosql@nosql:~$ sudo usermod -aG sudo hadoop
[sudo] password for nosql:
nosql@nosql:~$
```
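To double-check that `usermod -aG sudo` took effect, the group list of the new account can be inspected with `id -nG`. A small sketch; `user_in_group` is a hypothetical helper. A session that was already open for `hadoop` only picks up the new group after logging in again.

```shell
# Succeed if USER exists and belongs to GROUP (primary or supplementary).
# id -nG prints the group names separated by spaces; split them one per line
# and look for an exact, fixed-string match.
user_in_group() {
  id -nG "$1" 2>/dev/null | tr ' ' '\n' | grep -qxF -- "$2"
}

# Example:
#   user_in_group hadoop sudo && echo "hadoop is in the sudo group"
```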

Configure passwordless SSH

Switch to the `hadoop` user, generate an RSA key pair with an empty passphrase, authorize the public key for logins to this machine, and check that `ssh localhost` no longer asks for a password:

```
nosql@nosql:~$ su hadoop
Password:
hadoop@nosql:/home/nosql$ ssh-keygen -t rsa
Generating public/private rsa key pair.
Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):
Created directory '/home/hadoop/.ssh'.
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/hadoop/.ssh/id_rsa
Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub
The key fingerprint is:
SHA256:1gihCHzkG1RzVVVAoip6R1RsJhHFxwXwPi94hXEN3dw hadoop@nosql
The key's randomart image is:
[... CUT ...]
hadoop@nosql:/home/nosql$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
hadoop@nosql:/home/nosql$ chmod 0600 ~/.ssh/authorized_keys
hadoop@nosql:/home/nosql$ ssh localhost
The authenticity of host 'localhost (127.0.0.1)' can't be established.
ECDSA key fingerprint is SHA256:KdXNRol440EMnbFjffvxbQghjBZsaJF9/8WdQ6x3sIw.
Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
Warning: Permanently added 'localhost' (ECDSA) to the list of known hosts.
Welcome to Ubuntu 21.10 (GNU/Linux 5.13.0-21-generic x86_64)
[... CUT ...]
hadoop@nosql:~$ exit
logout
Connection to localhost closed.
```
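Appending with `cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys` adds a duplicate line every time the step is repeated. Below is a sketch of an idempotent variant, assuming a single-line public key file; `add_key_once` is a hypothetical helper. The commented `ssh -o BatchMode=yes` check fails instead of prompting for a password, so it reports whether key-based login really works.

```shell
# Append the key in PUBFILE to AUTHFILE only if that exact line is not
# already there, then set the permissions sshd expects.
add_key_once() {
  local pub="$1" auth="$2" key
  key=$(cat "$pub") || return 1
  mkdir -p "$(dirname "$auth")" && chmod 700 "$(dirname "$auth")"
  touch "$auth"
  # -x: whole-line match, -F: treat the key as a fixed string, not a regex
  if ! grep -qxF -- "$key" "$auth"; then
    printf '%s\n' "$key" >> "$auth"
  fi
  chmod 600 "$auth"
}

# Example, as the hadoop user:
#   add_key_once ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys
#   ssh -o BatchMode=yes localhost true && echo "passwordless SSH works"
```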

Download Hadoop

Still as the `hadoop` user, download the Hadoop 3.3.1 binary release, unpack it into `/usr/local/hadoop`, create a `logs` directory there and make `hadoop` the owner of the whole tree:

```
hadoop@nosql:/home/nosql$ cd ~
hadoop@nosql:~$ pwd
/home/hadoop
hadoop@nosql:~$ wget https://dlcdn.apache.org/hadoop/common/hadoop-3.3.1/hadoop-3.3.1.tar.gz
--2021-11-25 13:33:17--  https://dlcdn.apache.org/hadoop/common/hadoop-3.3.1/hadoop-3.3.1.tar.gz
Resolving dlcdn.apache.org (dlcdn.apache.org)... 151.101.2.132, 2a04:4e42::644
Connecting to dlcdn.apache.org (dlcdn.apache.org)|151.101.2.132|:443... connected.
HTTP request sent, awaiting response... 200 OK
Length: 605187279 (577M) [application/x-gzip]
Saving to: ‘hadoop-3.3.1.tar.gz’

hadoop-3.3.1.tar.gz 100%[======================>] 577.15M 10.1MB/s in 53s

2021-11-25 13:34:10 (10.9 MB/s) - ‘hadoop-3.3.1.tar.gz’ saved [605187279/605187279]

hadoop@nosql:~$ tar -zxvf hadoop-3.3.1.tar.gz
[... CUT ...]
hadoop@nosql:~$ ls -l
total 591012
drwxr-xr-x 10 hadoop hadoop      4096 Jun 15 07:52 hadoop-3.3.1
-rw-rw-r--  1 hadoop hadoop 605187279 Jun 15 11:55 hadoop-3.3.1.tar.gz
hadoop@nosql:~$ rm hadoop-3.3.1.tar.gz
hadoop@nosql:~$ ls -l
total 4
drwxr-xr-x 10 hadoop hadoop 4096 Jun 15 07:52 hadoop-3.3.1
hadoop@nosql:~$ sudo mv hadoop-3.3.1 /usr/local/hadoop
[sudo] password for hadoop:
hadoop@nosql:~$ sudo mkdir /usr/local/hadoop/logs
hadoop@nosql:~$ ls -l /usr/local/hadoop/
total 116
drwxr-xr-x 2 hadoop hadoop  4096 Jun 15 07:52 bin
drwxr-xr-x 3 hadoop hadoop  4096 Jun 15 07:15 etc
drwxr-xr-x 2 hadoop hadoop  4096 Jun 15 07:52 include
drwxr-xr-x 3 hadoop hadoop  4096 Jun 15 07:52 lib
drwxr-xr-x 4 hadoop hadoop  4096 Jun 15 07:52 libexec
-rw-rw-r-- 1 hadoop hadoop 23450 Jun 15 07:02 LICENSE-binary
drwxr-xr-x 2 hadoop hadoop  4096 Jun 15 07:52 licenses-binary
-rw-rw-r-- 1 hadoop hadoop 15217 Jun 15 07:02 LICENSE.txt
drwxr-xr-x 2 root   root    4096 Nov 25 13:39 logs
-rw-rw-r-- 1 hadoop hadoop 29473 Jun 15 07:02 NOTICE-binary
-rw-rw-r-- 1 hadoop hadoop  1541 May 21  2021 NOTICE.txt
-rw-rw-r-- 1 hadoop hadoop   175 May 21  2021 README.txt
drwxr-xr-x 3 hadoop hadoop  4096 Jun 15 07:15 sbin
drwxr-xr-x 4 hadoop hadoop  4096 Jun 15 08:18 share
hadoop@nosql:~$ ls -l /usr/local/ | grep hadoop
drwxr-xr-x 11 hadoop hadoop 4096 Nov 25 13:39 hadoop
hadoop@nosql:~$ sudo chown -R hadoop:hadoop /usr/local/hadoop/
hadoop@nosql:~$ ls -l /usr/local/ | grep hadoop
drwxr-xr-x 11 hadoop hadoop 4096 Nov 25 13:39 hadoop
hadoop@nosql:~$ ls -l /usr/local/hadoop/
total 116
drwxr-xr-x 2 hadoop hadoop  4096 Jun 15 07:52 bin
drwxr-xr-x 3 hadoop hadoop  4096 Jun 15 07:15 etc
drwxr-xr-x 2 hadoop hadoop  4096 Jun 15 07:52 include
drwxr-xr-x 3 hadoop hadoop  4096 Jun 15 07:52 lib
drwxr-xr-x 4 hadoop hadoop  4096 Jun 15 07:52 libexec
-rw-rw-r-- 1 hadoop hadoop 23450 Jun 15 07:02 LICENSE-binary
drwxr-xr-x 2 hadoop hadoop  4096 Jun 15 07:52 licenses-binary
-rw-rw-r-- 1 hadoop hadoop 15217 Jun 15 07:02 LICENSE.txt
drwxr-xr-x 2 hadoop hadoop  4096 Nov 25 13:39 logs
-rw-rw-r-- 1 hadoop hadoop 29473 Jun 15 07:02 NOTICE-binary
-rw-rw-r-- 1 hadoop hadoop  1541 May 21  2021 NOTICE.txt
-rw-rw-r-- 1 hadoop hadoop   175 May 21  2021 README.txt
drwxr-xr-x 3 hadoop hadoop  4096 Jun 15 07:15 sbin
drwxr-xr-x 4 hadoop hadoop  4096 Jun 15 08:18 share
```
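The tarball above is unpacked without any integrity check. Apache publishes a SHA-512 checksum file next to each release; the sketch below assumes it is reachable by appending `.sha512` to the tarball URL, and that a superseded release such as 3.3.1 may have moved from `dlcdn.apache.org` to `archive.apache.org` (both URLs are assumptions). `verify_sha512` is a hypothetical helper that takes the first 128-hex-digit string in the checksum file, so it does not depend on the exact line layout.

```shell
# Succeed only if FILE's SHA-512 digest equals the digest found in SUMFILE.
# Accepts both "HASH  FILE" and "SHA512 (FILE) = HASH" layouts.
verify_sha512() {
  local file="$1" sumfile="$2" expected actual
  expected=$(grep -oE '[0-9a-fA-F]{128}' "$sumfile" | head -n 1 | tr 'A-F' 'a-f')
  actual=$(sha512sum "$file" | cut -d ' ' -f 1)
  [ -n "$expected" ] && [ "$expected" = "$actual" ]
}

# Example (the checksum URL is an assumption):
#   wget https://archive.apache.org/dist/hadoop/common/hadoop-3.3.1/hadoop-3.3.1.tar.gz.sha512
#   verify_sha512 hadoop-3.3.1.tar.gz hadoop-3.3.1.tar.gz.sha512 && echo "checksum OK"
```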

Setting up the environment variables

From now on, work as your normal user again (`nosql` in this example).

Open `~/.bashrc` in a text editor:

```
nosql@nosql:~$ nano ~/.bashrc
```

and paste the following lines at the end of the file:

```shell
export HADOOP_HOME=/usr/local/hadoop
export HADOOP_INSTALL=$HADOOP_HOME
export HADOOP_MAPRED_HOME=$HADOOP_HOME
export HADOOP_COMMON_HOME=$HADOOP_HOME
export HADOOP_HDFS_HOME=$HADOOP_HOME
export YARN_HOME=$HADOOP_HOME
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin
export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"
```

Activate the environment variables with the following command:

```
nosql@nosql:~$ source ~/.bashrc
```
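Pasting into `~/.bashrc` by hand is easy to do twice by accident. Here is a sketch of a guarded append, assuming marker comments are acceptable in the file; `append_block_once` and the marker text are hypothetical. Quoting the here-document delimiter (`<<'EOF'`) keeps `$HADOOP_HOME` and `$PATH` unexpanded, so they are evaluated each time `~/.bashrc` is sourced.

```shell
# Append the text read from stdin to FILE, wrapped in marker comments,
# unless a block with the same MARKER is already present.
append_block_once() {
  local file="$1" marker="$2"
  if grep -qF "# >>> $marker >>>" "$file" 2>/dev/null; then
    return 0  # already there: leave the file unchanged
  fi
  {
    printf '# >>> %s >>>\n' "$marker"
    cat
    printf '# <<< %s <<<\n' "$marker"
  } >> "$file"
}

# Example (add the remaining export lines listed above in the same way):
#   append_block_once ~/.bashrc hadoop <<'EOF'
#   export HADOOP_HOME=/usr/local/hadoop
#   export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin
#   EOF
```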

Configure Hadoop

Setting up the Java environment variable

Find the directory of the JDK installed earlier by resolving the `javac` symlink:

```
nosql@nosql:~$ which javac
/usr/bin/javac
nosql@nosql:~$ readlink -f /usr/bin/javac
/usr/lib/jvm/java-11-openjdk-amd64/bin/javac
```
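The two lookups above can be folded into one step: resolve `javac` fully, then strip the trailing `bin/javac` to get the JDK root used for `JAVA_HOME`. A small sketch; `java_home_from_javac` is a hypothetical helper and expects an already resolved path.

```shell
# Given the fully resolved path of javac (JDK_ROOT/bin/javac), print JDK_ROOT.
java_home_from_javac() {
  dirname "$(dirname "$1")"
}

# Example:
#   java_home_from_javac "$(readlink -f "$(command -v javac)")"
# On the system shown above this gives /usr/lib/jvm/java-11-openjdk-amd64
```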

Open `hadoop-env.sh` in a text editor:

```
nosql@nosql:~$ sudo nano $HADOOP_HOME/etc/hadoop/hadoop-env.sh
[sudo] password for nosql:
```

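and add the following at the end of the file, setting `JAVA_HOME` to the JDK directory found above (the `javac` path without its trailing `bin/javac`):

```shell
export JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64
export HADOOP_CLASSPATH+=" $HADOOP_HOME/lib/*.jar"
```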

Check that the `hadoop` command now works:

```
nosql@nosql:~$ hadoop version
Hadoop 3.3.1
Source code repository https://github.com/apache/hadoop.git -r a3b9c37a397ad4188041dd80621bdeefc46885f2
Compiled by ubuntu on 2021-06-15T05:13Z
Compiled with protoc 3.7.1
From source with checksum 88a4ddb2299aca054416d6b7f81ca55
This command was run using /usr/local/hadoop/share/hadoop/common/hadoop-common-3.3.1.jar
```

Configure `core-site.xml`

Open the file in a text editor:

```
nosql@nosql:~$ sudo nano $HADOOP_HOME/etc/hadoop/core-site.xml
```

and add the following code inside the `<configuration>` section. It makes the HDFS NameNode at `localhost:9000` the default file system:

```xml
<property>
  <name>fs.defaultFS</name>
  <value>hdfs://localhost:9000</value>
  <description>The default file system URI</description>
</property>
```
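The fragment above has to end up inside the `<configuration>` element. As an alternative to editing by hand, here is a sketch that writes a complete configuration file from name/value pairs. `write_hadoop_xml` is a hypothetical helper: it overwrites the target file, omits the optional `<description>` element, and does not XML-escape values (none of the values in this guide need escaping).

```shell
# Write FILE as a Hadoop configuration containing one <property> per
# NAME VALUE pair given after FILE. Overwrites FILE.
write_hadoop_xml() {
  [ $# -ge 1 ] || return 1
  local file="$1"
  shift
  if [ $(( $# % 2 )) -ne 0 ]; then
    echo "write_hadoop_xml: expected NAME VALUE pairs" >&2
    return 1
  fi
  {
    printf '<?xml version="1.0" encoding="UTF-8"?>\n'
    printf '<configuration>\n'
    while [ $# -gt 0 ]; do
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
      shift 2
    done
    printf '</configuration>\n'
  } > "$file"
}

# Example (back up the original first; run as a user allowed to write it):
#   cp "$HADOOP_HOME/etc/hadoop/core-site.xml" /tmp/core-site.xml.bak
#   write_hadoop_xml "$HADOOP_HOME/etc/hadoop/core-site.xml" fs.defaultFS hdfs://localhost:9000
```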

Configure `hdfs-site.xml`

First create the directories where the NameNode and the DataNode will keep their data, and make `hadoop` their owner:

```
nosql@nosql:~$ sudo ls -l /home/hadoop/
total 0
nosql@nosql:~$ sudo mkdir -p /home/hadoop/hdfs/{namenode,datanode}
nosql@nosql:~$ sudo ls -l /home/hadoop/
total 4
drwxr-xr-x 4 root root 4096 Nov 25 14:07 hdfs
nosql@nosql:~$ sudo ls -l /home/hadoop/hdfs
total 8
drwxr-xr-x 2 root root 4096 Nov 25 14:07 datanode
drwxr-xr-x 2 root root 4096 Nov 25 14:07 namenode
nosql@nosql:~$ sudo chown -R hadoop:hadoop /home/hadoop/hdfs
nosql@nosql:~$ sudo ls -l /home/hadoop/
total 4
drwxr-xr-x 4 hadoop hadoop 4096 Nov 25 14:07 hdfs
nosql@nosql:~$ sudo ls -l /home/hadoop/hdfs
total 8
drwxr-xr-x 2 hadoop hadoop 4096 Nov 25 14:07 datanode
drwxr-xr-x 2 hadoop hadoop 4096 Nov 25 14:07 namenode
```

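Then open `$HADOOP_HOME/etc/hadoop/hdfs-site.xml` in a text editor (for example with `sudo nano`, as for the other files) and add the following code inside the `<configuration>` section. `dfs.replication` is 1 because this single-node cluster has only one DataNode. Hadoop 3 still accepts `dfs.name.dir` and `dfs.data.dir` as deprecated aliases of `dfs.namenode.name.dir` and `dfs.datanode.data.dir`.

```xml
<property>
  <name>dfs.replication</name>
  <value>1</value>
</property>
<property>
  <name>dfs.name.dir</name>
  <value>file:///home/hadoop/hdfs/namenode</value>
</property>
<property>
  <name>dfs.data.dir</name>
  <value>file:///home/hadoop/hdfs/datanode</value>
</property>
```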
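The `mkdir -p` plus `chown -R` pair used to create the storage directories can also be done in one step with `install -d`, which creates each listed directory with the given owner, group and mode. A sketch; `make_hdfs_dirs` is a hypothetical wrapper that only exists so the paths are parameters, and mode 755 matches the listing shown for those directories.

```shell
# Create BASE, BASE/namenode and BASE/datanode, each owned by OWNER:GROUP
# with mode 755. The parent is listed first so it gets the same owner.
make_hdfs_dirs() {
  local base="$1" owner="$2" group="$3"
  install -d -o "$owner" -g "$group" -m 755 \
    "$base" "$base/namenode" "$base/datanode"
}

# sudo cannot call a shell function, so on the real system the equivalent
# command (run as nosql) would be:
#   sudo install -d -o hadoop -g hadoop -m 755 /home/hadoop/hdfs \
#     /home/hadoop/hdfs/namenode /home/hadoop/hdfs/datanode
```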

Configure `mapred-site.xml`

Open the file in a text editor:

```
nosql@nosql:~$ sudo nano $HADOOP_HOME/etc/hadoop/mapred-site.xml
```

and add the following code inside the `<configuration>` section. It runs MapReduce jobs on YARN and passes `HADOOP_MAPRED_HOME` to the MapReduce application master, the map tasks and the reduce tasks:

```xml
<property>
  <name>mapreduce.framework.name</name>
  <value>yarn</value>
</property>
<property>
  <name>yarn.app.mapreduce.am.env</name>
  <value>HADOOP_MAPRED_HOME=$HADOOP_HOME</value>
</property>
<property>
  <name>mapreduce.map.env</name>
  <value>HADOOP_MAPRED_HOME=$HADOOP_HOME</value>
</property>
<property>
  <name>mapreduce.reduce.env</name>
  <value>HADOOP_MAPRED_HOME=$HADOOP_HOME</value>
</property>
```

Configure `yarn-site.xml`

Open the file in a text editor:

```
nosql@nosql:~$ sudo nano $HADOOP_HOME/etc/hadoop/yarn-site.xml
```

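and add the following code inside the `<configuration>` section. It enables the auxiliary shuffle service that NodeManagers use to serve map output to reducers:

```xml
<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
</property>
```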
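With four files edited, a quick way to review what was set is to list the property names in each one. This is a grep-based sketch, not an XML parser; `list_prop_names` is a hypothetical helper that assumes each `<name>` element sits on one line, and it also reports properties inside XML comments. Once Hadoop is on the `PATH`, `hdfs getconf -confKey fs.defaultFS` reports the value Hadoop actually resolves for a single key.

```shell
# Print the name of every <property> in a Hadoop XML configuration file,
# one per line, by extracting <name>...</name> and stripping the tags.
list_prop_names() {
  grep -o '<name>[^<]*</name>' "$1" | sed 's:</\{0,1\}name>::g'
}

# Example:
#   for f in core-site hdfs-site mapred-site yarn-site; do
#     echo "== $f.xml"
#     list_prop_names "$HADOOP_HOME/etc/hadoop/$f.xml"
#   done
```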

Format HDFS NameNode

The daemons will run as `hadoop`, so that user needs the same environment variables. As `hadoop`, copy the `.bashrc` prepared above, load it, and format the NameNode, which initializes the NameNode directory configured in `hdfs-site.xml`:

```
hadoop@nosql:~$ pwd
/home/hadoop
hadoop@nosql:~$ sudo cp /home/nosql/.bashrc /home/hadoop/
[sudo] password for hadoop:
hadoop@nosql:~$ ls -la
total 52
drwxr-x--- 6 hadoop hadoop 4096 Nov 25 14:07 .
drwxr-xr-x 4 root   root   4096 Nov 25 13:17 ..
-rw------- 1 hadoop hadoop    5 Nov 25 13:25 .bash_history
-rw-r--r-- 1 hadoop hadoop  220 Nov 25 13:17 .bash_logout
-rw-r--r-- 1 hadoop hadoop 4167 Nov 25 14:21 .bashrc
drwx------ 2 hadoop hadoop 4096 Nov 25 13:25 .cache
drwxr-xr-x 6 hadoop hadoop 4096 Nov 25 13:25 .config
drwxr-xr-x 4 hadoop hadoop 4096 Nov 25 14:07 hdfs
-rw-r--r-- 1 hadoop hadoop  807 Nov 25 13:17 .profile
drwx------ 2 hadoop hadoop 4096 Nov 25 13:25 .ssh
-rw-r--r-- 1 hadoop hadoop    0 Nov 25 13:20 .sudo_as_admin_successful
-rw-r--r-- 1 hadoop hadoop 1600 Nov 25 13:17 .Xdefaults
-rw-r--r-- 1 hadoop hadoop   14 Nov 25 13:17 .xscreensaver
hadoop@nosql:~$ source ~/.bashrc
hadoop@nosql:~$ hdfs namenode -format
[... CUT ...]
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at nosql/127.0.1.1
************************************************************/
```
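Formatting creates a fresh namespace, so running it again on a cluster that already holds data makes that data unreachable, and a DataNode left with the old cluster ID may refuse to start. A guard sketch, assuming that a formatted NameNode directory contains `current/VERSION` (the layout Hadoop 3 creates); `namenode_unformatted` is a hypothetical helper.

```shell
# Succeed only if the NameNode storage directory DIR has not been formatted
# yet, judged by the absence of DIR/current/VERSION.
namenode_unformatted() {
  [ ! -f "$1/current/VERSION" ]
}

# Example, as the hadoop user:
#   namenode_unformatted /home/hadoop/hdfs/namenode && hdfs namenode -format
```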

Start the Hadoop Cluster

As the `hadoop` user, start HDFS and then YARN:

```
hadoop@nosql:~$ start-dfs.sh
Starting namenodes on [localhost]
Starting datanodes
Starting secondary namenodes [nosql]
nosql: Warning: Permanently added 'nosql' (ECDSA) to the list of known hosts.
hadoop@nosql:~$ start-yarn.sh
Starting resourcemanager
Starting nodemanagers
```

Check that all components work correctly

Check the Hadoop daemons with `jps` (NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager should all be listed) and print the versions:

```
hadoop@nosql:~$ jps
5521 Jps
4596 NameNode
4870 SecondaryNameNode
5096 ResourceManager
5196 NodeManager
4702 DataNode
hadoop@nosql:~$ hadoop version
Hadoop 3.3.1
Source code repository https://github.com/apache/hadoop.git -r a3b9c37a397ad4188041dd80621bdeefc46885f2
Compiled by ubuntu on 2021-06-15T05:13Z
Compiled with protoc 3.7.1
From source with checksum 88a4ddb2299aca054416d6b7f81ca55
This command was run using /usr/local/hadoop/share/hadoop/common/hadoop-common-3.3.1.jar
hadoop@nosql:~$ hdfs version
Hadoop 3.3.1
Source code repository https://github.com/apache/hadoop.git -r a3b9c37a397ad4188041dd80621bdeefc46885f2
Compiled by ubuntu on 2021-06-15T05:13Z
Compiled with protoc 3.7.1
From source with checksum 88a4ddb2299aca054416d6b7f81ca55
This command was run using /usr/local/hadoop/share/hadoop/common/hadoop-common-3.3.1.jar
```

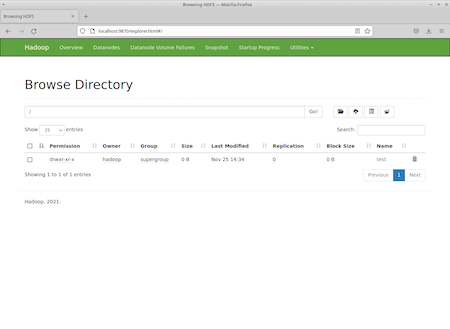
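Reading the `jps` list by eye works, but the comparison can also be scripted. This sketch assumes the five daemons shown in the `jps` output above are the complete set for this single-node setup; it must be fed from `jps` run as `hadoop`, because `jps` only lists the current user's JVMs. `missing_daemons` is a hypothetical helper.

```shell
# Read `jps` output ("PID Name" per line) on stdin and print each expected
# daemon that is not running. Prints nothing when all five are up.
missing_daemons() {
  local running d
  running=$(awk '{print $2}')
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    printf '%s\n' "$running" | grep -qxF "$d" || echo "$d"
  done
}

# Example, as the hadoop user:
#   jps | missing_daemons
```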

Check HDFS

```
hadoop@nosql:~$ hdfs dfs -ls /
hadoop@nosql:~$ hdfs dfs -mkdir /test
hadoop@nosql:~$ hdfs dfs -ls /
Found 1 items
drwxr-xr-x   - hadoop supergroup          0 2021-11-25 14:34 /test
```

The `nosql` user, however, can only read HDFS: `/` belongs to `hadoop` (the HDFS superuser, because it started the NameNode) and is not writable by other users:

```
nosql@nosql:~$ hdfs dfs -ls /
Found 1 items
drwxr-xr-x   - hadoop supergroup          0 2021-11-25 14:34 /test
nosql@nosql:~$ hdfs dfs -mkdir /tt
mkdir: Permission denied: user=nosql, access=WRITE, inode="/":hadoop:supergroup:drwxr-xr-x
```
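The HDFS check above does not exercise YARN or the MapReduce settings. One way to do that is to submit the `pi` estimator from the examples jar bundled with Hadoop; a successful run suggests that `mapred-site.xml` and `yarn-site.xml` fit together. `examples_jar` is a hypothetical helper that locates the jar without hard-coding the version; the map and sample counts in the example are arbitrary.

```shell
# Print the path of the MapReduce examples jar under HADOOP_HOME, skipping
# source and test jars. Returns 1 if no such jar exists.
examples_jar() {
  local jar
  for jar in "$1"/share/hadoop/mapreduce/hadoop-mapreduce-examples-*.jar; do
    case $jar in
      *-sources.jar|*-tests.jar) continue ;;
    esac
    [ -f "$jar" ] && { printf '%s\n' "$jar"; return 0; }
  done
  return 1
}

# Example, as the hadoop user with the cluster running (2 maps, 10 samples each):
#   hadoop jar "$(examples_jar "$HADOOP_HOME")" pi 2 10
```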

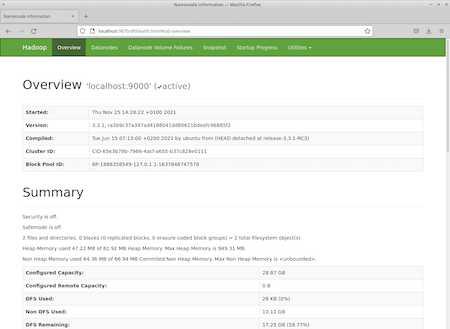

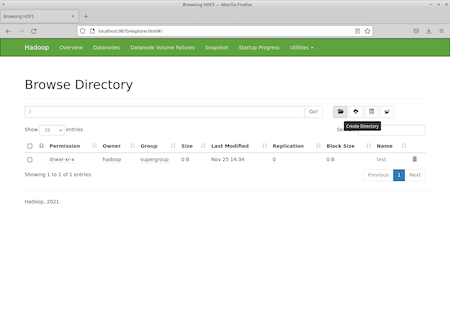

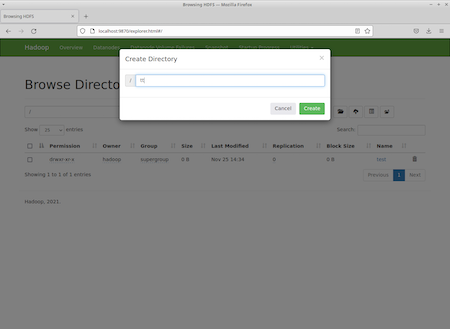

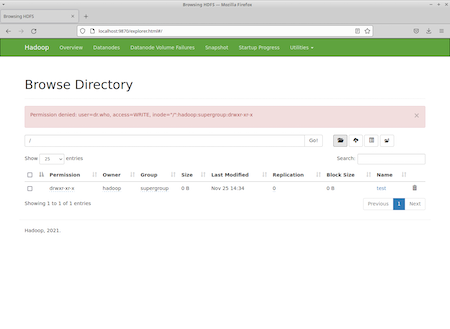

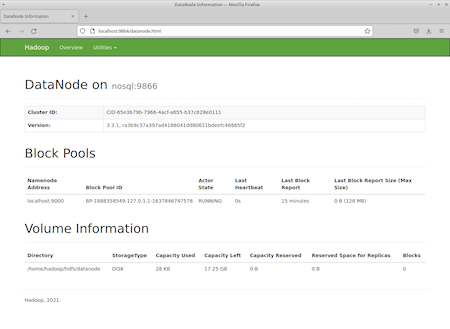

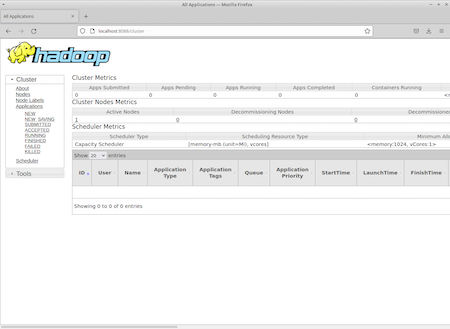

Access Hadoop Web Interface

- Access the Hadoop NameNode using the URL http://localhost:9870.
- Access the individual DataNodes using the URL http://localhost:9864.
- Access the YARN Resource Manager using the URL http://localhost:8088.

Screenshots of these pages are shown above.

Stop the Hadoop components

Again, the `nosql` user cannot manage the Hadoop daemons: `jps` only lists the current user's Java processes, and the stop scripts log in to each node over SSH as the current user, for whom no passwordless login was configured:

```
nosql@nosql:~$ jps
6349 Jps
nosql@nosql:~$ stop-yarn.sh
Stopping nodemanagers
localhost: Warning: Permanently added 'localhost' (ECDSA) to the list of known hosts.
localhost: nosql@localhost: Permission denied (publickey,password).
Stopping resourcemanager
nosql@nosql:~$ stop-dfs.sh
Stopping namenodes on [localhost]
localhost: nosql@localhost: Permission denied (publickey,password).
Stopping datanodes
localhost: nosql@localhost: Permission denied (publickey,password).
Stopping secondary namenodes [nosql]
nosql: Warning: Permanently added 'nosql' (ECDSA) to the list of known hosts.
nosql: nosql@nosql: Permission denied (publickey,password).
```

As the `hadoop` user, stop YARN and then HDFS:

```
hadoop@nosql:~$ jps
4596 NameNode
4870 SecondaryNameNode
5096 ResourceManager
5196 NodeManager
6748 Jps
4702 DataNode
hadoop@nosql:~$ stop-yarn.sh
Stopping nodemanagers
Stopping resourcemanager
hadoop@nosql:~$ stop-dfs.sh
Stopping namenodes on [localhost]
Stopping datanodes
Stopping secondary namenodes [nosql]
hadoop@nosql:~$ jps
7360 Jps
```