
Unicode solves some problems with text encodings, namely the problems of handling several different encodings at the same time, in the same document or program. Unifying all code spaces into one universal code space was a very good step. Unfortunately, the numbers from the Unicode code space still have to be encoded somehow so that Unicode strings can be processed on digital machines. The UTF encodings invented for this purpose, although used effectively in practice, cause a lot of problems for those who do not understand them.

Let's consider a very basic use case: matching two strings based on their hashes. This is a very popular technique that saves processing time or lets us transform non-anonymous documents into anonymised ones. Even this seemingly simple operation can be a source of serious problems.

Consider the very simple Polish text "Ala ma kota", which means "Alice has a cat" in English (file string_001.txt; this used to be one of the first sentences children learned to read at school in Poland). We can encode it with UTF-8, save it to a file and then compute the MD5 checksum, which should be 91162629d258a876ee994e9233b2ad87.

Consider the same text, also encoded with UTF-8 but saved to a file with a BOM (byte order mark; file string_001_bom.txt). It should be clearly stated that in both cases we see exactly the same sentence on the screen (in a text editor, for example). Computing the MD5 checksum gives a599385d5405c236714eb4cface9420b, which is different from the previous one. If we have no a priori information that the second file uses a BOM, this might be confusing. We may try to use some tools (for example http://w3c.github.io/xml-entities/unicode-names.html) to print the text character by character along with the Unicode code points and names, but this would not help either, because the output is the same for both files:

| Code point | Name | Character |
| --- | --- | --- |
| U+0041 | LATIN CAPITAL LETTER A | A |
| U+006C | LATIN SMALL LETTER L | l |
| U+0061 | LATIN SMALL LETTER A | a |
| U+0020 | SPACE | (space) |
| U+006D | LATIN SMALL LETTER M | m |
| U+0061 | LATIN SMALL LETTER A | a |
| U+0020 | SPACE | (space) |
| U+006B | LATIN SMALL LETTER K | k |
| U+006F | LATIN SMALL LETTER O | o |
| U+0074 | LATIN SMALL LETTER T | t |
| U+0061 | LATIN SMALL LETTER A | a |

We have to look at the binary structure to notice the additional bytes encoding the BOM (EF BB BF), which affect the calculated checksum.

| File | String (visible text) | MD5 | Bytes (hexadecimal) |
| --- | --- | --- | --- |
| string_001.txt | Ala ma kota | 91162629d258a876ee994e9233b2ad87 | 41 6C 61 20 6D 61 20 6B 6F 74 61 |
| string_001_bom.txt | Ala ma kota | a599385d5405c236714eb4cface9420b | EF BB BF 41 6C 61 20 6D 61 20 6B 6F 74 61 |
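The effect of the BOM can be reproduced without any files. The following is a minimal Python sketch, assuming the text is stored without a trailing newline. It relies on the standard `utf-8-sig` codec, which prepends the BOM when encoding and drops it when decoding. The helper `hex_bytes` and the variable names are illustrative only.

```python
import codecs
import hashlib


def hex_bytes(data: bytes) -> str:
    """Format bytes as space-separated upper-case hex pairs."""
    return " ".join(f"{b:02X}" for b in data)


text = "Ala ma kota"

# Plain UTF-8 versus UTF-8 preceded by a BOM (EF BB BF).
plain = text.encode("utf-8")
with_bom = text.encode("utf-8-sig")

for label, data in (("no BOM", plain), ("BOM", with_bom)):
    print(label, hex_bytes(data), hashlib.md5(data).hexdigest())

# Decoding with "utf-8-sig" removes a leading BOM if there is one,
# so both byte strings decode to the same text.
same_text = plain.decode("utf-8-sig") == with_bom.decode("utf-8-sig")
print(same_text)
```

The two printed digests differ because the hash is computed over bytes, not over the text that an editor displays.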

Another problem may be caused by invisible (non-printable) characters. Consider the same text, also encoded with UTF-8 and saved without a BOM, in the file string_002.txt. A character such as ZERO WIDTH SPACE (U+200B) may have been added to it, intentionally or not. Although the text looks exactly as before, the checksum is different: a3e420667ba7ac602cd0b23fbc59d0a6. Looking at the binary structure lets us notice the extra bytes E2 80 8B.

| File | String (visible text) | MD5 | Bytes (hexadecimal) |
| --- | --- | --- | --- |
| string_001.txt | Ala ma kota | 91162629d258a876ee994e9233b2ad87 | 41 6C 61 20 6D 61 20 6B 6F 74 61 |
| string_001_bom.txt | Ala ma kota | a599385d5405c236714eb4cface9420b | EF BB BF 41 6C 61 20 6D 61 20 6B 6F 74 61 |
| string_002.txt | Ala ma kota | a3e420667ba7ac602cd0b23fbc59d0a6 | 41 6C 61 E2 80 8B 20 6D 61 20 6B 6F 74 61 |

Before we take any action on such bytes (deleting them in a hex editor, for example), it is good to identify what they are used for. With the help of http://w3c.github.io/xml-entities/unicode-names.html we can see an extra ZERO WIDTH SPACE character, which in this case we can safely delete in any text editor.

| Code point | Name | Character |
| --- | --- | --- |
| U+0041 | LATIN CAPITAL LETTER A | A |
| U+006C | LATIN SMALL LETTER L | l |
| U+0061 | LATIN SMALL LETTER A | a |
| U+200B | ZERO WIDTH SPACE | (invisible) |
| U+0020 | SPACE | (space) |
| U+006D | LATIN SMALL LETTER M | m |
| U+0061 | LATIN SMALL LETTER A | a |
| U+0020 | SPACE | (space) |
| U+006B | LATIN SMALL LETTER K | k |
| U+006F | LATIN SMALL LETTER O | o |
| U+0074 | LATIN SMALL LETTER T | t |
| U+0061 | LATIN SMALL LETTER A | a |
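A listing like the one above can also be produced locally. The sketch below uses Python's standard `unicodedata` module. The function names `describe` and `strip_format_chars` are hypothetical helpers introduced only for illustration, and dropping every character of general category Cf is an assumption that fits this plain-text example, not a general rule.

```python
import unicodedata


def describe(text):
    """Return (code point, name) pairs for every character in text."""
    return [(f"U+{ord(ch):04X}", unicodedata.name(ch, "<unnamed>")) for ch in text]


def strip_format_chars(text):
    """Drop characters of general category Cf (format), e.g. U+200B or U+FEFF."""
    return "".join(ch for ch in text if unicodedata.category(ch) != "Cf")


suspicious = "Ala\u200b ma kota"  # contains an invisible ZERO WIDTH SPACE

for code, name in describe(suspicious):
    print(code, name)

cleaned = strip_format_chars(suspicious)
print(cleaned == "Ala ma kota")
```

Blindly removing all format characters is risky in other contexts: U+200D ZERO WIDTH JOINER belongs to the same category and is essential in emoji sequences.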

It should not surprise us that this text encoded in UTF-16 and saved with LE (little-endian) byte order results in a different MD5 checksum than the same text saved with BE (big-endian) byte order.

| File | String (visible text) | MD5 | Bytes (hexadecimal) |
| --- | --- | --- | --- |
| string_001.txt | Ala ma kota | 91162629d258a876ee994e9233b2ad87 | 41 6C 61 20 6D 61 20 6B 6F 74 61 |
| string_001_bom.txt | Ala ma kota | a599385d5405c236714eb4cface9420b | EF BB BF 41 6C 61 20 6D 61 20 6B 6F 74 61 |
| string_002.txt | Ala ma kota | a3e420667ba7ac602cd0b23fbc59d0a6 | 41 6C 61 E2 80 8B 20 6D 61 20 6B 6F 74 61 |
| string_003_16_be.txt | Ala ma kota | 7560048d24bfde987177e418ca451f3a | 00 41 00 6C 00 61 00 20 00 6D 00 61 00 20 00 6B 00 6F 00 74 00 61 |
| string_003_16_le.txt | Ala ma kota | 9f6d7d6ede0a538f356d8c1f15b4ca38 | 41 00 6C 00 61 00 20 00 6D 00 61 00 20 00 6B 00 6F 00 74 00 61 00 |

As we can see, computing any checksum on strings without the certainty that all of them use the same encoding and are saved in the same way (with or without a BOM, with the same byte order) is useless and might lead to serious problems. The first step is therefore to ensure that the two documents are saved using exactly the same encoding.
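One possible way to put this into practice is to decode every input with its known encoding and hash a single agreed byte form. The sketch below assumes that the encoding of each input is known in advance and picks UTF-8 without a BOM as the common form. The function `canonical_md5` is a hypothetical helper, not part of any library.

```python
import hashlib


def canonical_md5(data: bytes, encoding: str) -> str:
    """Decode bytes with their known encoding, then hash the UTF-8 (no BOM) form."""
    text = data.decode(encoding)
    return hashlib.md5(text.encode("utf-8")).hexdigest()


text = "Ala ma kota"
utf8_bom = text.encode("utf-8-sig")   # UTF-8 with BOM
utf16_be = text.encode("utf-16-be")   # UTF-16, big-endian, no BOM
utf16_le = text.encode("utf-16-le")   # UTF-16, little-endian, no BOM

# Hashing the raw bytes gives a different digest for every variant.
raw_hashes = {hashlib.md5(b).hexdigest() for b in (utf8_bom, utf16_be, utf16_le)}

# Hashing after decoding and re-encoding gives a single digest.
canonical_hashes = {
    canonical_md5(utf8_bom, "utf-8-sig"),
    canonical_md5(utf16_be, "utf-16-be"),
    canonical_md5(utf16_le, "utf-16-le"),
}

print(len(raw_hashes), len(canonical_hashes))
```

This only removes differences caused by the byte-level encoding. Extra or differently composed code points inside the text still change the hash.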

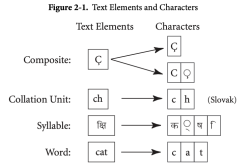

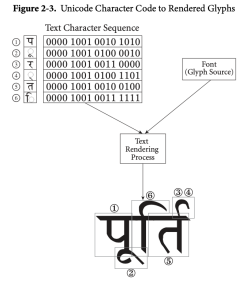

But even this does not solve all the problems. Now we have to face something really difficult: what does "a character" mean? What do users perceive as a single character? End users have various concepts of what constitutes a letter or character in the writing system of their language or languages. The precise scope of these end-user characters depends on the particular written language and the orthography it uses. In addition to the many instances of accented letters, they may extend to digraphs such as Slovak ch, trigraphs or longer combinations, and other sequences.

Despite this variety, the core concept of characters that should be kept together can be defined in a language-independent way. This core concept is known as a grapheme cluster: a sequence of one or more code points that should be treated as a single unit, for example a base character followed by the combining marks that apply to it. A combining mark is a character that applies to the preceding base character to create a grapheme. A grapheme, or symbol, is a minimally distinctive unit of writing in the context of a particular writing system (we may call it a "letter" if that helps us understand the basics). A grapheme is how a user thinks about a character. A concrete image of a grapheme displayed on the screen is called a glyph.

These concepts are well illustrated by two examples from The Unicode® Standard, Version 12.0 (Figure 2.1 on page 11 and Figure 2.3 on page 17).

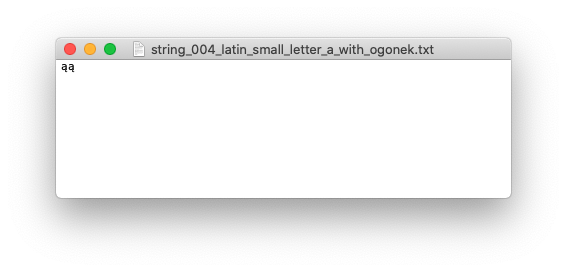

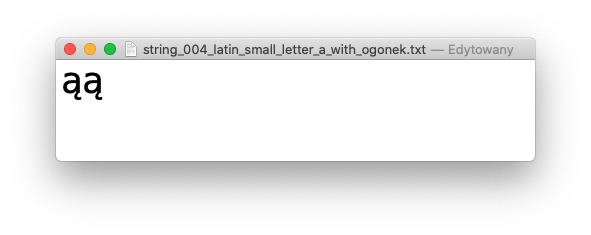

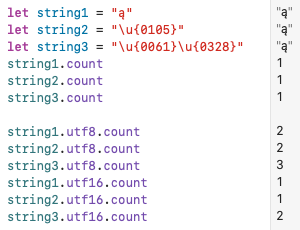

Notice that so far we are talking only about Unicode code points, totally ignoring how we "pack" these codes (numbers) into bytes. The next level of encoding, such as UTF, only adds another level of complexity. Unicode is complex enough by itself. For example, the Polish letter ą, called LATIN SMALL LETTER A WITH OGONEK, is defined by the single value U+0105. It can also be written as the plain letter a (U+0061), called LATIN SMALL LETTER A, followed by ˛ (U+0328), called COMBINING OGONEK (do not confuse it with the "pure" OGONEK), a combining diacritic hook placed under the lower right corner of a vowel. In both cases what is displayed is ą, and a user might reasonably expect both strings not only to be equal to each other but also to have a length of one character, no matter which technique was used to produce the ą. A test made in the Swift programming language confirms our reasoning (count returns the number of characters in a string).
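Not every language behaves the way the Swift test does. As a contrast, here is a minimal Python sketch: Python strings are sequences of code points, so the two spellings of ą compare as different and have different lengths until they are normalized. The calls to `unicodedata.normalize` with the NFC and NFD forms are standard library functionality. The variable names are illustrative.

```python
import unicodedata

composed = "\u0105"     # LATIN SMALL LETTER A WITH OGONEK
decomposed = "a\u0328"  # LATIN SMALL LETTER A + COMBINING OGONEK

# Python compares and counts code points, not user-perceived characters.
print(composed == decomposed)          # False
print(len(composed), len(decomposed))  # 1 2

# Normalization maps both spellings to one agreed form.
nfc = unicodedata.normalize("NFC", decomposed)  # composed form
nfd = unicodedata.normalize("NFD", composed)    # decomposed form
print(nfc == composed, nfd == decomposed)       # True True
```

When strings are compared or hashed in such a language, normalizing both sides to the same form first is one way to get the result a user would expect.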

Knowing the UTF codes, we can even prepare a file with two letters ą, the first as a single value and the second combined, saved in UTF-8.

| Encoding | U+0105 LATIN SMALL LETTER A WITH OGONEK | U+0061 LATIN SMALL LETTER A | U+0328 COMBINING OGONEK |
| --- | --- | --- | --- |
| UTF-8 | C4 85 | 61 | CC A8 |
| UTF-16BE | 01 05 | 00 61 | 03 28 |
| UTF-16LE | 05 01 | 61 00 | 28 03 |
| UTF-32BE | 00 00 01 05 | 00 00 00 61 | 00 00 03 28 |
| UTF-32LE | 05 01 00 00 | 61 00 00 00 | 28 03 00 00 |

This should also be interpreted correctly, as shown in the picture below.

Continuing this test we can examine UTF-8 and UTF-16 encoding.

Results

The results string3.utf8.count = 3 and string3.utf16.count = 2 can be confusing at first sight, but both are easily explained. Unicode data can be encoded with code units of different widths: in UTF-8 a code unit has 8 bits and in UTF-16 it has 16 bits. In other words, when UTF-8 is used we count 8-bit packets (61 CC A8; first: 61, second: CC, third: A8) and when UTF-16 is used we count 16-bit packets (00 61 03 28; first: 00 61, second: 03 28).
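The same counts can be checked by encoding the string and counting code units by hand. This is a minimal Python sketch, assuming the string is the decomposed ą (a followed by COMBINING OGONEK) discussed above. The variable names are illustrative.

```python
decomposed = "a\u0328"  # LATIN SMALL LETTER A + COMBINING OGONEK

utf8_bytes = decomposed.encode("utf-8")
utf16_bytes = decomposed.encode("utf-16-be")

utf8_count = len(utf8_bytes)         # one UTF-8 code unit per byte
utf16_count = len(utf16_bytes) // 2  # one UTF-16 code unit per two bytes

print(utf8_bytes.hex(), utf8_count)    # 61cca8 3
print(utf16_bytes.hex(), utf16_count)  # 00610328 2
```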

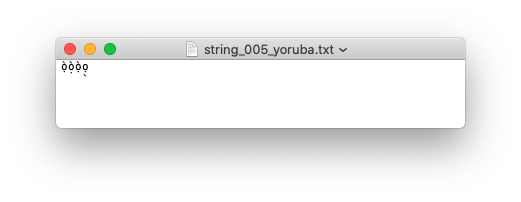

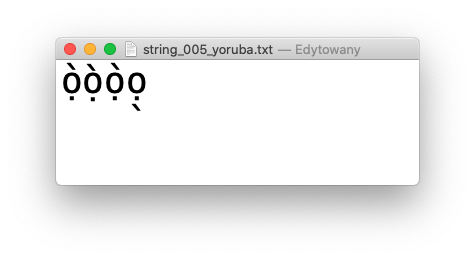

If you have a headache, please keep in mind that the Polish letter ą is a "simple" case and there are more complex characters. For example, Yoruba has the character ọ̀, which can be written in three different ways: by composing ò with a dot, by composing ọ with a grave, or by composing o with both a grave and a dot. For the last one, the two diacritics can be in either order, so all of these are equal in the human sense (all are displayed as the "letter" ọ̀) but not in the computer sense (each is encoded in a different way).
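The four spellings can be compared programmatically. The sketch below is a Python illustration using the standard `unicodedata` module: the raw code point sequences are all different, while NFC normalization maps them to a single sequence. The list `spellings` is built by hand for this example.

```python
import unicodedata

spellings = [
    "\u1ecd\u0300",   # o with dot below, then combining grave
    "\u00f2\u0323",   # o with grave, then combining dot below
    "o\u0300\u0323",  # o, combining grave, combining dot below
    "o\u0323\u0300",  # o, combining dot below, combining grave
]

# As raw code point sequences, all four strings are different.
distinct_raw = len(set(spellings))

# After NFC normalization only one sequence remains.
normalized = {unicodedata.normalize("NFC", s) for s in spellings}

print(distinct_raw, len(normalized))
```

There is no single precomposed code point for this letter, so the normalized form still consists of two code points (U+1ECD followed by U+0300).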

In that case Swift (or the Xcode IDE) seems to have some problems.

The first two characters are displayed correctly while the next two are incorrect.

Encoding in UTF-8 (a file with the four letters ọ̀, stringY2-stringY5, as they are tested in Swift):

| Encoding | U+1ECD LATIN SMALL LETTER O WITH DOT BELOW | U+0300 COMBINING GRAVE ACCENT | U+00F2 LATIN SMALL LETTER O WITH GRAVE | U+0323 COMBINING DOT BELOW | U+006F LATIN SMALL LETTER O |
| --- | --- | --- | --- | --- | --- |
| UTF-8 | E1 BB 8D | CC 80 | C3 B2 | CC A3 | 6F |
| UTF-16BE | 1E CD | 03 00 | 00 F2 | 03 23 | 00 6F |
| UTF-16LE | CD 1E | 00 03 | F2 00 | 23 03 | 6F 00 |
| UTF-32BE | 00 00 1E CD | 00 00 03 00 | 00 00 00 F2 | 00 00 03 23 | 00 00 00 6F |
| UTF-32LE | CD 1E 00 00 | 00 03 00 00 | F2 00 00 00 | 23 03 00 00 | 6F 00 00 00 |

Trying to display them in a text editor also runs into problems, as shown in the picture below.

Unlike in the Swift case, the first three characters are displayed correctly while the last one is incorrect. Notice also that the second character looks slightly different from the first and the third.

Unicode defines characters used in written language, so it also defines graphical elements which are not typical linguistic characters but are nevertheless used in conversations: the well-known emoji. Some of them, like U+1F60A (😊), called SMILING FACE WITH SMILING EYES, are encoded with just one code point:

| Encoding | U+1F60A SMILING FACE WITH SMILING EYES (hex) |
| --- | --- |
| UTF-8 | F0 9F 98 8A |
| UTF-16BE | D8 3D DE 0A |
| UTF-16LE | 3D D8 0A DE |
| UTF-32BE | 00 01 F6 0A |
| UTF-32LE | 0A F6 01 00 |
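These byte sequences can be reproduced with Python's built-in codecs. The sketch below is only an illustration. `hex_bytes` is a hypothetical formatting helper, and the explicit `-be` and `-le` codec names are used so that no BOM is added.

```python
def hex_bytes(data: bytes) -> str:
    """Format bytes as space-separated upper-case hex pairs."""
    return " ".join(f"{b:02X}" for b in data)


emoji = "\U0001F60A"  # SMILING FACE WITH SMILING EYES

encodings = ("utf-8", "utf-16-be", "utf-16-le", "utf-32-be", "utf-32-le")
table = {name: hex_bytes(emoji.encode(name)) for name in encodings}

for name, value in table.items():
    print(f"{name:10} {value}")
```

Note that UTF-16 needs two 16-bit code units (a surrogate pair) for this single code point, because U+1F60A lies above U+FFFF.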

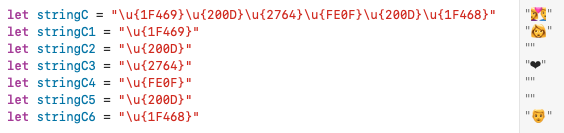

Today we may use a lot of "composed" emoji, which are encoded as a sequence of different code points. This is an example of a grapheme (and, when displayed on the screen, a glyph) mentioned earlier: one symbol consisting of many characters that should be kept together.

For example, the Couple With Heart: Woman, Man (👩❤️👨) emoji is a sequence of U+1F469 WOMAN (👩), U+2764 HEAVY BLACK HEART (❤) followed by U+FE0F VARIATION SELECTOR-16, and U+1F468 MAN (👨), joined with U+200D ZERO WIDTH JOINER between the emoji. The final sequence is U+1F469, U+200D, U+2764, U+FE0F, U+200D, U+1F468.

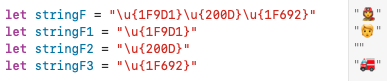

Some emoji are not displayed directly in the combined glyph but are instead used as a modifier for other emoji. The Firefighter (🧑🚒) emoji is a sequence of U+1F9D1 ADULT (🧑) and U+1F692 FIRE ENGINE (🚒) joined with U+200D ZERO WIDTH JOINER. The final sequence is U+1F9D1, U+200D, U+1F692.

The Man Firefighter: Dark Skin Tone (👨🏿🚒) emoji is a sequence of U+1F468 MAN (👨), U+1F3FF EMOJI MODIFIER FITZPATRICK TYPE-6 (🏿) and U+1F692 FIRE ENGINE (🚒), with U+200D ZERO WIDTH JOINER between the last two characters. The final sequence is U+1F468, U+1F3FF, U+200D, U+1F692.
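To see how far apart "one symbol on the screen" and "what is stored" can be, the sketch below takes the Couple With Heart sequence described earlier and counts it in three ways. It is a Python illustration with hand-written escape sequences. Python's standard library does not segment text into grapheme clusters, so it reports code points and code units only.

```python
import unicodedata

# WOMAN, ZWJ, HEAVY BLACK HEART, VARIATION SELECTOR-16, ZWJ, MAN
couple = "\U0001F469\u200D\u2764\uFE0F\u200D\U0001F468"

names = [unicodedata.name(ch, "<unnamed>") for ch in couple]
code_points = len(couple)                            # Python counts code points
utf8_bytes = len(couple.encode("utf-8"))             # 8-bit code units
utf16_units = len(couple.encode("utf-16-be")) // 2   # 16-bit code units

for ch, name in zip(couple, names):
    print(f"U+{ord(ch):04X}", name)
print(code_points, utf8_bytes, utf16_units)
```

A user sees one symbol, yet the string holds 6 code points, 20 UTF-8 bytes and 8 UTF-16 code units. Splitting or truncating it at an arbitrary index would break the emoji.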

Taking the above into account, it is highly probable that any "hand-made" DIY string methods will sooner or later fail, so please be very careful and use the right tool in the right place.