Informations presented on this page may be incomplete or outdated. You can find the most up-to-date version reading my book NoSQL. Theory and examples

Informations presented on this page may be incomplete or outdated. You can find the most up-to-date version reading my book NoSQL. Theory and examplesIn this part we cover the following topics

We live in a data driven world and there are no premises that this situation will change in the nearest future. Now we are witnesses of something which can be called data revolution. As the industrial revolution has changed the way we think about production from being created essentially by hand in house to being created in assembly lines that are located in big factories so our data sources also has changed. Time ago all data was generated locally "in house". Now, data comes in from everywhere: our actions (visiting web pages, online shops, playing games , etc.), social networks, various sensors , etc. We can say, that situation is even worse: data becomes a perpetuum mobile: any set of data generates twice as much new data which generates twice as much new data which...

More data allows us to use more efficient and sophisticated algorithms. Better algorithms means that we can work better, faster, safer and our general satisfaction with the use of technology is increasing. The more people is satisfied with the technology they use, the more new users will it attract. More users generate more data. More data allows us to...

There are people who says: There is nothing more practical than good theory. I don't like this statement and instead say: There is nothing more theoretical than good practice. Hadoop is a good example of this: practical needs have led to the new computational paradigm and one of the best known type of the NoSQL databases: column family.

The story began when PageRank was developed in Google. Without getting into the details of which interested readers can read in a books or internet, one can say that PageRank allowed the relevance of a page to be weighted based on the number of links to that page instead of simply indexed on keywords within webpages. With this we are making implicit assumption that the more popular a page is the more links to that page can be found in the whole network. This seems to be reasonable: if I don't like someone's page I don't have a link to it and conversely, I have links to the pages I like. PageRank is a great example of a data-driven algorithm that leverages the collective intelligence (known also as wisdom of the crowd) and that can adapt intelligently as more data is available which is very close related with another trendy and popular term -- machine learning -- close related with big data which in turn is related with NoSQL.

Predicted large amount of data to be processed, PageRank required the use of non-standard solutions.

- Google decided to extensively use massively parallelizing and distributing processing across very large numbers of low budget servers.

- Instead of dedicated storage servers built and operated by other companies, storage based on disks directly attached to the same servers which perform data processing.

- Redefine unit of computation. No more individual servers. Instead the Google Modular Data Center were used. The Modular Data Center comprises shipping containers that house about a thousand custom-designed Intel based servers running Linux. Each module includes an independent power supply and air conditioning. Thus, data center capacity is increased not by adding new servers individually but by adding new modules with 1,000 servers each.

We have to emphasize here the most significant assumption made by Google: data collecting and processing are based on highly parallel and distributed technology. There was only one problem: such a technology didn't exist at the time.

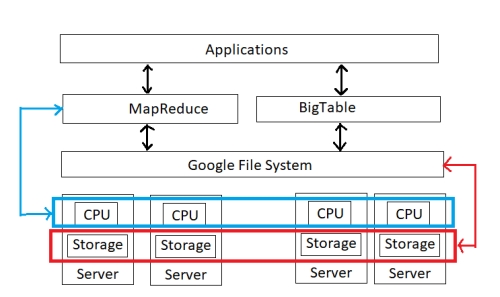

To solve this problem, Google developed its own uniques software stack. There were three major software layers to serve as the foundation for the Google platform.

- Google File System (GFS). GFS is a distributed cluster file system that allows all of the disks to be accessed as one massive, distributed, redundant file system.

- MapReduce. A distributed processing framework that allows run parallel algorithms across very large number of servers. Architecture assumes nodes' unreliability, and dealing with huge datasets. MapReduce is a framework for performing parallel processing on large datasets across multiple computers (nodes). In the MapReduce framework, the map operation has a master node which breaks up an operation into subparts and distributes each operation to another node for processing. The reduce is the process where the master node collects the results from the other nodes and combines them into the answer to the original problem.

In some sense MapReduce resembles divide and conquer approach in algorithmic which is a design paradigm based on multi-branched recursion. A divide and conquer algorithm works by recursively breaking down a problem into two or more sub-problems of the same or related type, until these become simple enough to be solved directly. The solutions to the sub-problems are then combined to give a solution to the original problem.

- BigTable. A database system that uses the GFS for storage. It means that this was highly distributed layer. From previous material we guess that it couldn't based on relational model.

In this architecture data which are going to be computed are divided into large blocks and distributes across nodes. Then packaged code is transferred into nodes to process the data in parallel. This approach takes advantage of data locality, where nodes manipulate the data they have access to. This allows the dataset to be processed faster and more efficiently than it would be in a more conventional supercomputer architecture that relies on a parallel file system where computation and data are distributed via high-speed networking.

Our point of interest in this tutorial is the last component: the database layer, so we will skip all the further information related to the previous two components.

Hadoop: Open-Source Google Stack

The Google stack as a directly available software wasn't generally exposed to the public. Fortunately details of it were published in a series of papers ([A1], [A2], [A3]). Based on the ideas described in these document, and having positively verified working examples (Google itself), an open source Apache project being an equivalent of Google stack, has been started. This project is known under the Hadoop name.

Hadoop's origins

In 2004, Doug Cutting and Mike Cafarella were working on an open-source project to build a web search engine called Nutch, which would be on top of Apache Lucene. Lucene was a Java-based text indexing and searching library. The Nutch team tend to use Lucene to build a scalable web search engine with the explicit intent of creating an open-source equivalent of the proprietary technologies used by Google.

Initial versions of Nutch could not demonstrate the required scalability allowing to index the entire web. A solution comes from the GFS and MapReduce thanks to Google's published papers (2003, 2004). Having an access to a proven architectural conception, the Nutch team implemented their own equivalents of Google's stack. Because MapReduce was tuned not only to web processing, it soon became clear that this paradigm can be used to a huge number of different massively data processing tasks. The resulting project, named Hadoop, was made available for download in 2007, and became a top-level Apache project in 2008.

In some sense Hadoop has become the de facto standard for massive unstructured data storage and processing. If you don't agree, say why giants such as IBM, Microsoft or Oracle offers Hadoop in their product set.

We can say that no any other project before has had as great influence on Big Data as Hadoop. The main reasons for this were (and still are)

- Accessibility Hadoop was free - everyone can download, install and work with it. Everyone can do this because we don't need superserver to host this software stack - we can install it even on a low budget laptop.

- Good level of abstraction GFS abstracts the storage contained in the nodes. MapReduce abstracts the processing power contained within these nodes. BigTable allows easy storage of almost everything. One week of self training is enough to start using this platform.

- Perfect scalability We can start very small company almost without any costs. Over time, as we become more and more recognizable, we can offer "more" without needs to architectural changes - we simply add another nodes to get more power. This allows many start-ups to offer good solutions based on a proven architectural conception.

Apache Hadoop's main components: MapReduce -- a processing part, and Hadoop Distributed File System (HDFS) -- a storage part were inspired by Google papers on their MapReduce and Google File System. Hadoop’s architecture roughly parallels that of Google. It consists of computer clusters built from commodity hardware. All the modules in Hadoop are designed with a fundamental assumption that hardware failures are common situation and should be automatically handled by the framework.

The base Apache Hadoop framework is composed of the following modules:

- Hadoop Common – contains libraries and utilities needed by other Hadoop modules.

- Hadoop Distributed File System (HDFS) – a distributed file-system that stores data on commodity machines.

- Hadoop YARN – a platform responsible for managing computing resources in clusters and using them for scheduling users' applications.

- Hadoop MapReduce – an implementation of the MapReduce programming model for large-scale data processing.

Nowadays the term Hadoop refers not just to the base components, but rather to the whole ecosystem, or collection of additional software packages that can be installed on top of or alongside Hadoop:

- Apache Pig Apache Pig is a high-level platform for creating programs that runs on Apache Hadoop. The language used for this platform is called Pig Latin. Pig can execute its Hadoop jobs in MapReduce or Apache Spark. With Pig Latin we have one more layer in Hadoop stack abstracting the Java based MapReduce approach into a notation which allows high level programming with MapReduce.

Let's look in a basic example of Pig usage: word counting snippet

1234567input_lines = LOAD 'input_data.txt' AS (line:chararray);words = FOREACH input_lines GENERATE FLATTEN(TOKENIZE(line)) AS word;filtered_words = FILTER words BY word MATCHES '\\w+';word_groups = GROUP filtered_words BY word;word_count = FOREACH word_groups GENERATE COUNT(filtered_words) AS count, group AS word;ordered_word_count = ORDER word_count BY count DESC;STORE ordered_word_count INTO '/tmp/results.txt'; - Apache Hive Apache Hive is a data warehouse software project built on top of Apache Hadoop for providing data summarization, query, and analysis. With Hive we have one more layer in Hadoop stack abstracting the low-level Java API for making queries into an SQL-like query (HiveQL) interface. With this, since most data warehousing applications work with SQL-based querying languages, an SQL-based applications can be ported to Hadoop with less problems.

12345678DROP TABLE IF EXISTS docs;CREATE TABLE docs (line STRING);LOAD DATA INPATH 'input_data.txt' OVERWRITE INTO TABLE input_lines;CREATE TABLE word_counts ASSELECT word, count(1) AS count FROM(SELECT explode(split(line, '\s')) AS word FROM input_lines) tempGROUP BY wordORDER BY word;

- Apache HBase HBase is an open source, non relational (column-oriented key-value), distributed database modeled based on Google's Bigtable. It runs on top of HDFS (Hadoop Distributed File System), providing Bigtable-like capabilities for Hadoop. Tables in HBase can serve as the input and output for MapReduce jobs run in Hadoop, and may be accessed no only through the Java API but also through REST, Avro or Thrift gateway APIs.

- Apache Phoenix Apache Phoenix is an open source, massively parallel, relational database engine supporting online transaction processing (OLTP) for Hadoop using Apache HBase.

- Apache Spark Apache Spark is an open source cluster-computing framework. It requires a cluster manager and a distributed storage system. For cluster management, Spark supports, among others, Hadoop YARN. For distributed storage, Spark can interface with a wide variety, including Hadoop Distributed File System (HDFS).

- Apache ZooKeeper Apache ZooKeeper (Chubby in Google's BigTable) is a software project of the Apache Software Foundation. It is essentially a distributed hierarchical key-value store, which is used to provide a distributed configuration service, synchronization service, and naming registry for large distributed systems.

- Apache Flume Apache Flume is a distributed, reliable, and available service for efficiently collecting, aggregating, and moving large amounts of log data.

- Apache Sqoop Apache Sqoop is a command-line interface application for transferring data between relational databases and Hadoop.

- Apache Storm Apache Storm is an open source distributed stream processing computation framework.

Although the Hadoop framework itself is mostly written in the Java programming language, and this might be a most natural choice when some new software is created, there are many other languages wich can be used. The most popular are: Python and Scala.

As mentioned earlier, Google published three key papers revealing the architecture of their platform. The GFS and MapReduce papers served as the basis for the core Hadoop architecture. The third paper describing BigTable served as the basis for one of Hadoop components and the first NoSQL database systems: HBase.

HBase uses Hadoop HDFS as a file system in the same way that most traditional relational databases use the operating system file system. By using Hadoop HDFS as its file system HBase gets for free the following features.

- HBase is able to create tables containing very huge amounts of data. Much bigger than is able to be stored in relational databases.(?)

- HBase need not store multiple copies of data to protect against data loss thanks to the fault tolerance of HDFS.

Although HBase is a schema free database (like all NoSQL databases) it does enforce some kind of structure on the data. This structure is very basic and is based on well known terms: columns, rows, tables and keys. However, HBase tables vary significantly from the relational tables with which we are familiar.

To get a sense how the HBase data model works we describe two concepts:

- aggregation oriented model,

- sparse data.

Data aggregation is the compiling of information from databases with intent to prepare combined datasets for data processing.

In database management an aggregate function is a function where the values of multiple rows are grouped together to form a single value of more significant meaning or measurement such as a set, a bag or a list.

Common aggregate functions include: average, count, maximum, sum.

To explain this concept we will recall our Star Wars based example with orders products from the Space Universe store.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 |

Table: invoice Invoice Customer number number ================= 1 10 2 30 3 20 4 30 Table: customer_details Customer Customer Customer number name location ================================== 10 Dart Vader Star Destroyer 20 Luke Skywalker Naboo 30 C3PO Tatooine Table: invoice_details Invoice Invoice Item Item Item number item name quantity price ================================================ 1 1 lightsaber 1 100 1 2 black cloak 2 50 1 3 air filter 10 2 2 1 battery 1 25 3 1 lightsaber 5 75 3 2 belt 1 5 4 1 wires 1 10 |

In a relational model, each table field must contain basic (atomic) information (then the table is in the first normal form). This excludes, for example, the nesting of tuples inside other tuples. Even tuples as such should be eliminated. This is a bad model for clustering. There is a natural unit for replication and sharing: aggregates.

Note that, aggregated data-oriented databases are also application oriented, as programmers typically handle aggregation-oriented data. In most cases we don't want, for example, atomic data about order, client or invoice to combine them in one object in our application. Better option is to get object we need directly from our database system. In some sense this is what ORM's do: maps set of objects into one object suitable for direct processing in our application. Aggregated database models are: key-value databases, document databases, column families stores.

The key-value database can store any data, they are "transparent" to the database (the database does not "see" their structure). It can only impose limitations on data size. A document databases are more restrictive: there are some limitation concerning structures we can use as well as data types.

In a key-value database, access to aggregation can only be obtained through the aggregation key. You cannot perform operations on aggregation parts here. A document databases are more flexible. We can perform database operations based on the aggregation part.

If we want to present our Star Wars based example in an aggregation-oriented version, we must first determine the aggregation boundaries. These limits need to be determined taking into account what data will most often be collected together. If we use the data most often only for order processing, we can put everything in one "orders" aggregation. In this case this structure written in JSON format looks like this:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 |

// orders aggregation { "Invoice number" : 1, "Invoice details" : [ {"Item name" : "lightsaber", "Item quantity" : 1, "Item price" : 100}, {"Item name" : "black cloak", "Item quantity" : 2, "Item price" : 50}, {"Item name" : "air filter", "Item quantity" : 10, "Item price" : 2} ], "Customer details" : { "Customer name" : "Dart Vader" "Customer location" : "Star Destroyer" } }, { "Invoice number" : 2, "Invoice details" : [ {"Item name" : "battery", "Item quantity" : 1, "Item price" : 25} ], "Customer details" : { "Customer name" : "C3PO" "Customer location" : "Tatooine" } }, { "Invoice number" : 3, "Invoice details" : [ {"Item name" : "lightsaber", "Item quantity" : 5, "Item price" : 75}, {"Item name" : "belt", "Item quantity" : 1, "Item price" : 5} ], "Customer details" : { "Customer name" : "Luke Skywalker" "Customer location" : "Naboo" } }, { "Invoice number" : 4, "Invoice details" : [ {"Item name" : "wires", "Item quantity" : 1, "Item price" : 10} ], "Customer details" : { "Customer name" : "C3PO" "Customer location" : "Tatooine" } } |

If the most commonly use case is sales of an items analysis, it might be better to create two aggregates "customers" and "orders".

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 |

// customers aggregation { "Customer number" : 10, "Customer name" : "Dart Vader", "Customer location" : "Star Destroyer" }, { "Customer number" : 20, "Customer name" : "Luke Skywalker", "Customer location" : "Naboo" }, { "Customer number" : 30, "Customer name" : "C3PO", "Customer location" : "Tatooine" } // orders aggregation { "Customer number" : 10, "Invoice number" : 1, "Invoice details" : [ {"Item name" : "lightsaber", "Item quantity" : 1, "Item price" : 100}, {"Item name" : "black cloak", "Item quantity" : 2, "Item price" : 50}, {"Item name" : "air filter", "Item quantity" : 10, "Item price" : 2} ] }, { "Customer number" : 30, "Invoice number" : 2, "Invoice details" : [ {"Item name" : "battery", "Item quantity" : 1, "Item price" : 25} ] }, { "Customer number" : 20, "Invoice number" : 3, "Invoice details" : [ {"Item name" : "lightsaber", "Item quantity" : 5, "Item price" : 75}, {"Item name" : "belt", "Item quantity" : 1, "Item price" : 5} ] }, { "Customer number" : 30, "Invoice number" : 4, "Invoice details" : [ {"Item name" : "wires", "Item quantity" : 1, "Item price" : 10} ] } |

What’s typical about aggregation-oriented data representation is that if we look them in a tabular form, we would see that only a few cells contains data. This case is similar to sparse matrix which is a grid of values where only a small percent of cells contain values.

If we will show our orders aggregation from previous section in tabular form it will be like this:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

| Customer details | Invoice details | -------+----------+----------+------------+------------+-------------+-------------+------------+------------+------+-------+---------+---------+-------+-------+ Invoice| Customer | Customer | lightsaber | lightsaber | black cloak | black cloak | air filter | air filter | belt | belt | battery | battery | wires | wires | number | name | location | | price | | price | | price | | price | | price | | price | -------+----------+----------+------------+------------+-------------+-------------+------------+------------+------+-------+---------+---------+-------+-------+ 1 |Dart Vader|Star | 1 | 100 | 2 | 50 | 10 | 2 | | | | | | | | |Destroyer | | | | | | | | | | | | | -------+----------+----------+------------+------------+-------------+-------------+------------+------------+------+-------+---------+---------+-------+-------+ 2 |C3PO |Tatooine | | | | | | | | | 1 | 25 | | | -------+----------+----------+------------+------------+-------------+-------------+------------+------------+------+-------+---------+---------+-------+-------+ 3 |Luke |Naboo | 5 | 75 | | | | | 1 | 5 | | | | | |Skywalker | | | | | | | | | | | | | | -------+----------+----------+------------+------------+-------------+-------------+------------+------------+------+-------+---------+---------+-------+-------+ 4 |C3PO |Tatooine | | | | | | | | | | | 1 |10 | -------+----------+----------+------------+------------+-------------+-------------+------------+------------+------+-------+---------+---------+-------+-------+ |

As we can see in this trivial example in Invoice details section we have 4 x 12 = 48 cells but only 14 of them have some values. The more items we will offer the more columns we will have but the same time less cells will have some values because people will buy just a few things from a whole assortment. This is how we get a sparse data.

Column family systems are important NoSQL data architecture patterns because they can scale to manage large volumes of data.

A general idea behind column families data model is to support frequent access to data used together. Column families for a single row may or may not be near each other when stored on disk, but columns within a one column family are kept together. This is a fundamental element supporting (or supported) by aggregations. It's true, that HBase is a schema free database, so we can store what we want, when we want (we don't have to specify values for all columns as well as we don't have to specify types of our data) and how we want (no need to create tables and columns ahead) but one thing should be decided before we start our database: column families.

The BigTable database, in terms of structure, is completely different from relational databases. It is a fixed (persistent) multidimensional ordered map. Column family stores use row and column identifiers as general purposes keys for data lookup in this map. More precisely map is indexed by four keys/names: row keys, column families, column keys, and time stamps (the latter defines different versions of the same data). All values in this map appear as unmanaged character tables (their interpretation, such as data type, is an application task).

In an example below we will shows an example of such a map saved in JSON format. We can see that BigTable organizes data as maps nested in other maps. Within each map, the data is physically sorted by the keys of the map.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 |

{ "fulmanp" : { ----------------- row key "info" : { ---------------- column family "email" : { ----------- column name t1: fulmanp@math.uni.lodz.pl }, "name" : { t1: Piotr }, "phone" : { t1: 123456 -------- versions (indexed by timestamps) t4: 456789 }, "surname" : { t1: Fulmański } } }, "radmat" : { "info" : { "email" : { t2: radmat@math.uni.lodz.pl }, "name" : { t2: Radosław }, "phone" : { t2: 234567 }, "surname" : { t2: Matusik }, } }, "wojczyk" : { "info" : { "email" : { t3: wojczyk@math.uni.lodz.pl }, "name" : { t3: Sebastian }, "phone" : { t3: 345678 }, "surname" : { t3: Wojczyk } } } } |

The basic unit of storing information is the row identified by a key. A row key usage allows access to the associated columns (also identified by theirs keys). The row key is a string of arbitrary characters from a typical length of 10 to 100 bytes (the maximum length is 64KiB). Saving and reading rows is an "atomic" (indivisible) operation regardless of the number of columns we read or write to. BigTable stores rows structured lexicographically according to theirs keys.

Rows in the table are dynamically partitioned (divided into smaller subsets). Each of these subset (row ranges), called a tablet, determines a distribution unit in a cluster. Wise use of the tablet(s) helps to balance the database load. Readings from small row ranges are more efficient, because they typically require communication between fewer machines, in the best case we use data from one machine.

Columns. The rows consists of columns. Each row may have a different number of columns storing different data types (including compound types).

The column keys are grouped into sets called column families. They are the basic unit when accessing data. Data stored in a given family is usually of the same type. Column families must be created before starting data placement. Once created, you cannot use a column key that does not belong to any family. The number of different column families in the table should not exceed 100 and should be rarely changed at work (their change requires a database stop and restart). The number of columns is not limited. They can be added and removed while the database is running.

There may be different versions of the same cell in the BigTable database identified by timestamp. For this reason, it is necessary to clearly label these versions. Therefore every cell contains a time stamp. It can be assigned by the BigTable database engine (in this case, the real time expressed in microseconds), or by client applications. Different versions of cells are stored in the descending order of the date. This is because the latest versions can be read first. There are two options to not store to many versions and automatically remove older data: keep the last $n$ versions, or for example versions from some time period (eg the last week).

From the above set of information we can infer that, the way to access a cell in a BigTable table is four-dimensional. To access a particular table cell, we enter the following four keys: row key, column family name, column name, time stamp.

|

1 2 3 4 5 6 7 8 9 10 11 |

row |--- Column family: info ---| key | Column 1 Column 2 Column 3 Column 4 | key key key key | | | | | | name surname email phone | | | | | fulmanp t1: Piotr t1: Fulmański t1: fulmanp@math.uni.lodz.pl t1: 123456 --- version t4: 456789 indexed radmat t2:Radosław t2:Matusik t2: radmat@math.uni.lodz.pl t2: 234567 by timestamp (t1) wojczyk t3:Sebastian t3:Wojczyk t3: wojczyk@math.uni.lodz.pl t3: 345678 |

We may use one set of four keys (row key, column family name, column name, time stamp) to specify one specific cell version.

|

1 2 3 |

compound key value (version) | | [fulmanp, info, phone, t1] -> 123456 |

Using a set of three keys (row key, column family name, column name) we specify all versions of a given cell.

|

1 2 |

[fulmanp, info, phone] -> t1: 123456 t4: 456789 |

Using a set of two keys (row key, column family name), we specify all cells in a given column family.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

[fulmanp, info] -> { "email" : { t1: fulmanp@math.uni.lodz.pl }, "name" : { t1: Piotr }, "phone" : { t1: 123456 t4: 456789 }, "surname" : { t1: Fulmański } } |

Using a row key we specify all columns from all column families.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 |

[fulmanp] -> { "info" : { "email" : { t1: fulmanp@math.uni.lodz.pl }, "name" : { t1: Piotr }, "phone" : { t1: 123456 t4: 456789 }, "surname" : { t1: Fulmański } } } |

Technically HBase is written in Java, works on top of Hadoop, and relies on ZooKeeper. A HBase cluster can be set up in either local or distributed mode. Distributed mode can further be either pseudo distributed or fully distributed mode.

HBase needs the Java Runtime Environment (JRE) to be installed and available on the system so this would be our first step. Oracle’s Java is the recommended package for use in production systems.

This section describes the setup of a single node standalone HBase. To run HBase in the standalone mode, we don’t need to do a lot. A standalone instance has all HBase components -- the Master, RegionServers, and ZooKeeper -- running in a single JVM saving data to the local filesystem.

Perform the following steps for installing Java

- We are going to use HBase in version 2.0 and this, as for now, supports Java 8.

1234567891011nosql@nosql-virtual-machine:~$ sudo add-apt-repository ppa:webupd8team/javanosql@nosql-virtual-machine:~$ sudo apt updatenosql@nosql-virtual-machine:~$ sudo apt install oracle-java8-installer[While the install process, you have to accept Java license to continue downloading & installing Java binaries.]nosql@nosql-virtual-machine:~$ sudo apt install oracle-java8-set-defaultnosql@nosql-virtual-machine:~$ java -versionjava version "1.8.0_151"Java(TM) SE Runtime Environment (build 1.8.0_151-b12)Java HotSpot(TM) 64-Bit Server VM (build 25.151-b12, mixed mode) - We are required to set the

JAVA_HOMEenvironment variable before starting HBase. We can set the variable via an operating system’s usual mechanism as we do it now or use HBase mechanism and edit conf/hbase-env.sh file as we do in further section.

12345678910111213141516171819202122232425nosql@nosql-virtual-machine:~$ which java/usr/bin/javanosql@nosql-virtual-machine:~$ ls -l /usr/bin/javalrwxrwxrwx 1 root root 22 lis 8 15:06 /usr/bin/java -> /etc/alternatives/javanosql@nosql-virtual-machine:~$ ls -l /etc/alternatives/javalrwxrwxrwx 1 root root 39 lis 8 15:06 /etc/alternatives/java -> /usr/lib/jvm/java-8-oracle/jre/bin/javanosql@nosql-virtual-machine:~$ ls -l /usr/lib/jvm/java-8-oracle/total 25952drwxr-xr-x 2 root root 4096 lis 8 15:06 bin-r--r--r-- 1 root root 3244 lis 8 15:06 COPYRIGHTdrwxr-xr-x 4 root root 4096 lis 8 15:06 dbdrwxr-xr-x 3 root root 4096 lis 8 15:06 include-rwxr-xr-x 1 root root 5200672 lis 8 15:06 javafx-src.zipdrwxr-xr-x 5 root root 4096 lis 8 15:06 jredrwxr-xr-x 5 root root 4096 lis 8 15:06 lib-r--r--r-- 1 root root 40 lis 8 15:06 LICENSEdrwxr-xr-x 4 root root 4096 lis 8 15:06 man-r--r--r-- 1 root root 159 lis 8 15:06 README.html-rw-r--r-- 1 root root 526 lis 8 15:06 release-rw-r--r-- 1 root root 21115360 lis 8 15:06 src.zip-rwxr-xr-x 1 root root 63933 lis 8 15:06 THIRDPARTYLICENSEREADME-JAVAFX.txt-r--r--r-- 1 root root 145180 lis 8 15:06 THIRDPARTYLICENSEREADME.txtroot@nosql-virtual-machine:~# echo "export JAVA_HOME=/usr" >> /etc/profileroot@nosql-virtual-machine:~# echo $JAVA_HOME/usr/lib/jvm/java-8-oracle

- Download the HBase binary. Choose a download site from this list of Apache Download Mirrors. Click on the suggested top link. This will take us to a mirror of HBase Releases. Click on the folder named stable and then download the binary file (.tar.gz). In our case this is hbase-1.2.6-bin.tar.gz (about 100MiB) file dated on 2017-06-20 12:42.

- Extract the downloaded file,

1nosql@nosql-virtual-machine:~/Desktop/downloads$ tar -zxvf hbase-1.2.6-bin.tar.gz

and change your working directory to the just-created

12nosql@nosql-virtual-machine:~/Desktop/downloads$ cd hbase-1.2.6/nosql@nosql-virtual-machine:~/Desktop/downloads/hbase-1.2.6$ - As we have stated in a previous section, we are required to set the JAVA_HOME environment variable before starting HBase. We can do this either via an operating system’s usual mechanism or use HBase mechanism and edit conf/hbase-env.sh file as we do now. Edit this file, uncomment the line starting with JAVA_HOME, and set it to the appropriate location for our operating system -- it should be set to a directory which contains the executable file bin/java. Because most Linux operating systems provide a mechanism for transparently switching between versions of executables such as Java (see /usr/bin/alternatives lines in previous section) we can set JAVA_HOME to the directory containing the symbolic link to bin/java, which is usually /usr.

In our case lines

12# The java implementation to use. Java 1.7+ required.# export JAVA_HOME=/usr/java/jdk1.6.0/were replaced by

12# The java implementation to use. Java 1.7+ required.export JAVA_HOME=/usr - Edit conf/hbase-site.xml, which is the main HBase configuration file. Working in the standalone mode we only need to specify the directory on the local filesystem where HBase and ZooKeeper write data. By default, a new directory is created under /tmp which may not be what we want because this is temporary directory and its contents is... temporary and may be deleted. The following configuration will store HBase’s data in the HBase directory, in the home directory of the user called nosql.

12345678910<configuration><property><name>hbase.rootdir</name><value>file:///home/nosql/hbase</value></property><property><name>hbase.zookeeper.property.dataDir</name><value>/home/nosql/zookeeper</value></property></configuration>

- Almost done. The HBase installation provides a convenient way to start it via the bin/start-hbase.sh After issuing the command, if all goes well, a message is logged to standard output showing that HBase started successfully. We should verify this with the jps command: there should be only one running process called HMaster. In standalone mode HBase runs all daemons (HMaster, HRegionServer, ZooKeeper) within this single JVM.

HBase also comes with a preinstalled web-based management console that can be accessed using http://localhost:60010. By default, it is deployed on HBase's Master host at port 60010. This UI provides information about various components such as region servers, tables, running tasks, logs, and so on.

1234567nosql@nosql-virtual-machine:~/Desktop/downloads/hbase-1.2.6$ bin/start-hbase.shstarting master, logging to /home/nosql/Desktop/downloads/hbase-1.2.6/bin/../logs/hbase-nosql-master-nosql-virtual-machine.outJava HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0nosql@nosql-virtual-machine:~/Desktop/downloads/hbase-1.2.6$ jps46132 HMaster46458 Jps

- Connect to HBase

1234567osql@nosql-virtual-machine:~/Desktop/downloads/hbase-1.2.6/bin$ ./hbase shell2017-11-08 23:58:11,056 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicableHBase Shell; enter 'help<RETURN>' for list of supported commands.Type "exit<RETURN>" to leave the HBase ShellVersion 1.2.6, rUnknown, Mon May 29 02:25:32 CDT 2017hbase(main):001:0> - Get HBase status

12hbase(main):001:0> status1 active master, 0 backup masters, 1 servers, 1 dead, 2.0000 average load - Create a table

12345678hbase(main):003:0> create 'tab1', 'cf1'0 row(s) in 1.3710 seconds=> Hbase::Table - tab1hbase(main):004:0> create 'tab2', 'cf1', 'cf2'0 row(s) in 1.2480 seconds=> Hbase::Table - tab2 - List information about tables

1234567hbase(main):020:0> listTABLEtab1tab22 row(s) in 0.0320 seconds=> ["tab1", "tab2"] - Describes the metadata information about tables

1234567891011121314151617181920hbase(main):001:0> describe 'tab1'Table tab1 is ENABLEDtab1COLUMN FAMILIES DESCRIPTION{NAME => 'cf1', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}1 row(s) in 0.5440 secondshbase(main):002:0> describe 'tab2'Table tab2 is ENABLEDtab2COLUMN FAMILIES DESCRIPTION{NAME => 'cf1', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}{NAME => 'cf2', BLOOMFILTER => 'ROW', VERSIONS => '1', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}2 row(s) in 0.0310 seconds - Put data into our table

1234567891011121314hbase(main):003:0> put 'tab1', 'row-001', 'cf1:a', 'First value'0 row(s) in 0.1280 secondshbase(main):004:0> put 'tab1', 'row-001', 'cf1:b', 1230 row(s) in 0.0300 secondshbase(main):005:0> put 'tab1', 'row-001', 'cf1:c', 123.4560 row(s) in 0.0080 secondshbase(main):006:0> put 'tab1', 'row-001', 'cf1:d', 4560 row(s) in 0.0210 secondshbase(main):007:0> put 'tab1', 'row-001', 'cf1:d', 'Replacement'0 row(s) in 0.0150 seconds - Read data by row

1234567hbase(main):009:0> get 'tab1', 'row-001'COLUMN CELLcf1:a timestamp=1510182483121, value=First valuecf1:b timestamp=1510182504781, value=123cf1:c timestamp=1510182511625, value=123.456cf1:d timestamp=1510182537839, value=Replacement4 row(s) in 0.0500 seconds1234hbase(main):010:0> get 'tab1', 'row-001', {COLUMN => 'cf1:d', VERSIONS => 3}COLUMN CELLcf1:d timestamp=1510182537839, value=Replacement1 row(s) in 0.0220 seconds123456789101112131415161718192021222324hbase(main):012:0> create 'tab3',{NAME=>"cf1", VERSIONS=>5}0 row(s) in 1.3510 seconds=> Hbase::Table - tab3hbase(main):013:0> describe 'tab3'Table tab3 is ENABLEDtab3COLUMN FAMILIES DESCRIPTION{NAME => 'cf1', BLOOMFILTER => 'ROW', VERSIONS => '5', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}1 row(s) in 0.0480 secondshbase(main):014:0> put 'tab3', 'row-001', 'cf1:a', 1230 row(s) in 0.0320 secondshbase(main):015:0> put 'tab3', 'row-001', 'cf1:a', 123.4560 row(s) in 0.0240 secondshbase(main):016:0> get 'tab3', 'row-001', {COLUMN => 'cf1:a', VERSIONS => 3}COLUMN CELLcf1:a timestamp=1510183532925, value=123.456cf1:a timestamp=1510183529338, value=1232 row(s) in 0.0330 seconds - Read all data

123456789101112131415161718hbase(main):001:0> scan 'tab1'ROW COLUMN+CELLrow-001 column=cf1:a, timestamp=1510182483121, value=First valuerow-001 column=cf1:b, timestamp=1510182504781, value=123row-001 column=cf1:c, timestamp=1510182511625, value=123.456row-001 column=cf1:d, timestamp=1510182537839, value=Replacement1 row(s) in 0.5110 secondshbase(main):002:0> scan 'tab3'ROW COLUMN+CELLrow-001 column=cf1:a, timestamp=1510183532925, value=123.4561 row(s) in 0.0410 secondshbase(main):003:0> scan 'tab3', VERSIONS=>3ROW COLUMN+CELLrow-001 column=cf1:a, timestamp=1510183532925, value=123.456row-001 column=cf1:a, timestamp=1510183529338, value=1231 row(s) in 0.0440 seconds

- Delete data from one cell

123456789hbase(main):004:0> delete 'tab1', 'row-001', 'cf1:d'0 row(s) in 0.1030 secondshbase(main):005:0> scan 'tab1'ROW COLUMN+CELLrow-001 column=cf1:a, timestamp=1510182483121, value=First valuerow-001 column=cf1:b, timestamp=1510182504781, value=123row-001 column=cf1:c, timestamp=1510182511625, value=123.4561 row(s) in 0.0400 seconds123456hbase(main):012:0> delete 'tab3', 'row-001', 'cf1:a'0 row(s) in 0.0050 secondshbase(main):013:0> scan 'tab3', VERSIONS=>3ROW COLUMN+CELL0 row(s) in 0.0100 seconds1234567891011121314151617181920212223242526272829303132333435363738hbase(main):001:0> put 'tab3', 'row-001', 'cf1:a', 'abc'0 row(s) in 0.5460 secondshbase(main):002:0> put 'tab3', 'row-001', 'cf1:a', 'def'0 row(s) in 0.0040 secondshbase(main):003:0> scan 'tab3', VERSIONS=>3ROW COLUMN+CELLrow-001 column=cf1:a, timestamp=1510184160905, value=defrow-001 column=cf1:a, timestamp=1510184156304, value=abc1 row(s) in 0.0730 secondshbase(main):005:0> delete 'tab3', 'row-001', 'cf1:a', 15101841609050 row(s) in 0.0240 secondshbase(main):006:0> scan 'tab3', VERSIONS=>3ROW COLUMN+CELL0 row(s) in 0.0190 secondshbase(main):007:0> put 'tab3', 'row-001', 'cf1:a', 'abc'0 row(s) in 0.0270 secondshbase(main):008:0> put 'tab3', 'row-001', 'cf1:a', 'def'0 row(s) in 0.0100 secondshbase(main):009:0> scan 'tab3', VERSIONS=>3ROW COLUMN+CELLrow-001 column=cf1:a, timestamp=1510184447024, value=defrow-001 column=cf1:a, timestamp=1510184441361, value=abc1 row(s) in 0.0320 secondshbase(main):010:0> delete 'tab3', 'row-001', 'cf1:a', 15101844413610 row(s) in 0.0080 secondshbase(main):011:0> scan 'tab3', VERSIONS=>3ROW COLUMN+CELLrow-001 column=cf1:a, timestamp=1510184447024, value=def1 row(s) in 0.0150 seconds

- Delete data from one row

12345678910111213hbase(main):012:0> scan 'tab1'ROW COLUMN+CELLrow-001 column=cf1:a, timestamp=1510182483121, value=First valuerow-001 column=cf1:b, timestamp=1510182504781, value=123row-001 column=cf1:c, timestamp=1510182511625, value=123.4561 row(s) in 0.0510 secondshbase(main):013:0> deleteall 'tab1', 'row-001'0 row(s) in 0.0110 secondshbase(main):014:0> scan 'tab1'ROW COLUMN+CELL0 row(s) in 0.0100 seconds - Make changes to an existing table

1234567891011121314151617181920212223242526hbase(main):015:0> alter 'tab1', NAME=>'cf1', VERSIONS=>5Updating all regions with the new schema...1/1 regions updated.Done.0 row(s) in 2.1190 secondshbase(main):016:0> describe 'tab1'Table tab1 is ENABLEDtab1COLUMN FAMILIES DESCRIPTION{NAME => 'cf1', BLOOMFILTER => 'ROW', VERSIONS => '5', IN_MEMORY => 'false', KEEP_DELETED_CELLS => 'FALSE', DATA_BLOCK_ENCODING => 'NONE', TTL => 'FOREVER', COMPRESSION => 'NONE', MIN_VERSIONS => '0', BLOCKCACHE => 'true', BLOCKSIZE => '65536', REPLICATION_SCOPE => '0'}1 row(s) in 0.0500 secondshbase(main):017:0> put 'tab1', 'row-001', 'cf1:d', 4560 row(s) in 0.0190 secondshbase(main):018:0> put 'tab1', 'row-001', 'cf1:d', 'Replacement'0 row(s) in 0.0090 secondshbase(main):019:0> scan 'tab1', VERSIONS=>3ROW COLUMN+CELLrow-001 column=cf1:d, timestamp=1510185095846, value=Replacementrow-001 column=cf1:d, timestamp=1510185082718, value=4561 row(s) in 0.0270 seconds - Disable and enable a table. If we want to delete a table or change its settings, as well as in some other situations, we need to disable the table first, using the disable command. We can re-enable it using the enable command.

12345hbase(main):021:0> disable 'tab3'0 row(s) in 2.3520 secondshbase(main):023:0> enable 'tab3'0 row(s) in 1.2750 seconds - Delete the table

123456789101112131415161718192021222324252627282930hbase(main):022:0> listTABLEtab1tab2tab33 row(s) in 0.0130 seconds=> ["tab1", "tab2", "tab3"]hbase(main):024:0> disable 'tab3'0 row(s) in 2.2570 secondshbase(main):025:0> drop 'tab3'0 row(s) in 1.2970 secondshbase(main):026:0> listTABLEtab1tab22 row(s) in 0.0190 seconds=> ["tab1", "tab2"]hbase(main):027:0> drop 'tab1'ERROR: Table tab1 is enabled. Disable it first.Here is some help for this command:Drop the named table. Table must first be disabled:hbase> drop 't1'hbase> drop 'ns1:t1' - Exit the HBase Shell

12hbase(main):028:0> exitnosql@nosql-virtual-machine:~/Desktop/downloads/hbase-1.2.6/bin$ - Stop HBase

1234nosql@nosql-virtual-machine:~/Desktop/downloads/hbase-1.2.6/bin$ ./stop-hbase.shstopping hbase...................nosql@nosql-virtual-machine:~/Desktop/downloads/hbase-1.2.6/bin$ jps46732 Jps

Note that after issuing the command, it can take several minutes for the processes to shut down. That's why use the jps to be sure that the HMaster and all other components are shut down.